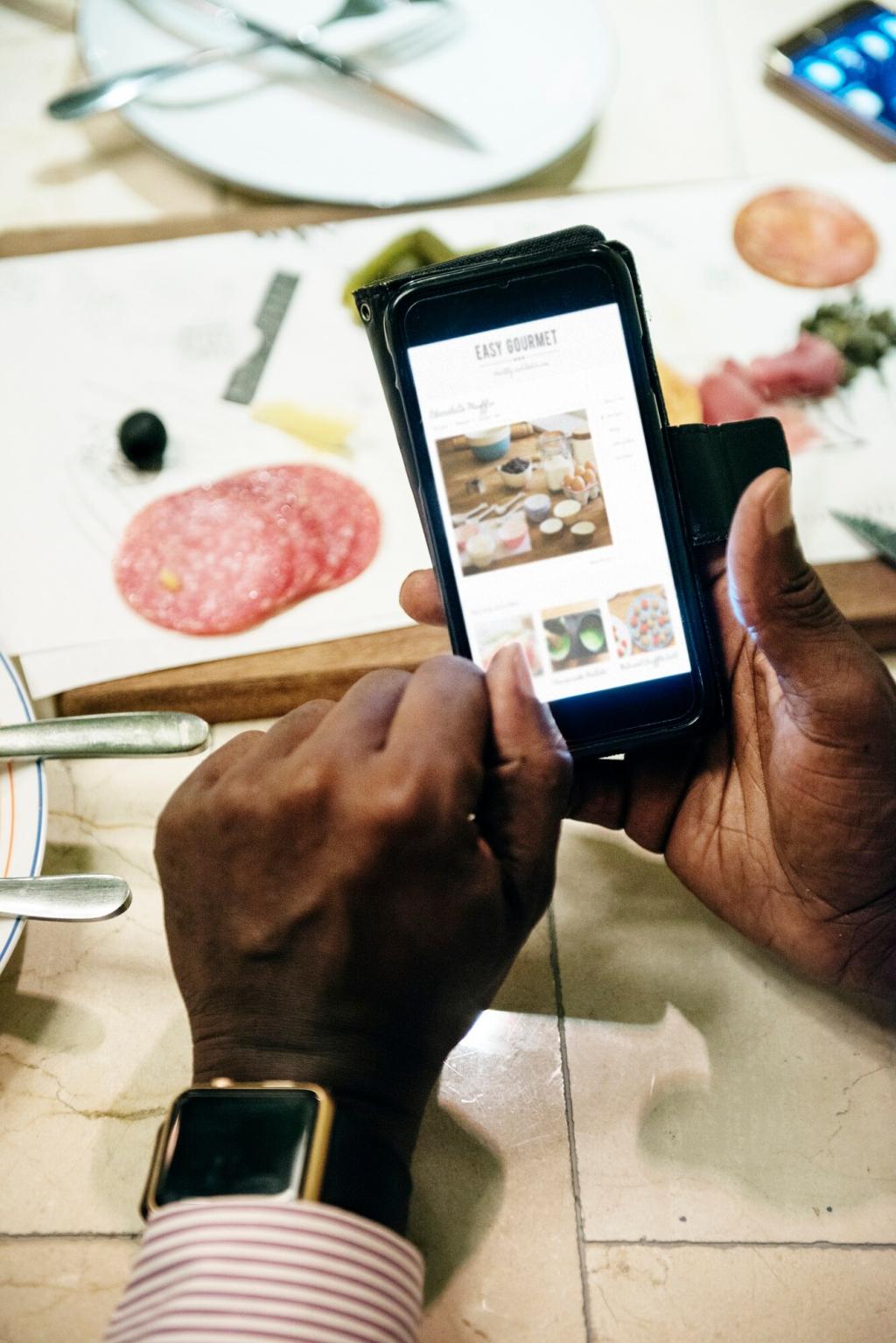

Laying the Groundwork: Strategy First, Devices Second

Map user journeys to measurable risks, such as sign-in reliability, purchase-flow resilience, and session continuity. Tie each risk to an explicit test objective and an exit criterion that defines when testing for that risk is done. This makes conversations with product and engineering concrete, speeds up trade-off decisions, and prevents the unchecked test sprawl that slows mobile teams down.
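
One way to make the risk-to-objective mapping concrete is a small risk register that pairs each risk with a measurable exit criterion. The sketch below assumes a Python tooling script. `RiskObjective`, `REGISTER`, `unmet_exit_criteria`, the metric names, and the thresholds are all hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskObjective:
    """One user-journey risk tied to a test objective and a measurable exit criterion."""
    journey: str
    risk: str
    objective: str
    metric: str       # name of the measured result this criterion reads (hypothetical)
    threshold: float  # minimum acceptable value for that metric (placeholder)


# Hypothetical register: metrics and thresholds are illustrative only.
REGISTER = [
    RiskObjective("sign-in", "sign-in reliability",
                  "Sign-in succeeds across supported OS versions",
                  "sign_in_pass_rate", 0.99),
    RiskObjective("purchase", "purchase-flow resilience",
                  "Checkout completes after a network drop mid-payment",
                  "purchase_recovery_rate", 0.95),
    RiskObjective("session", "session continuity",
                  "Session survives backgrounding and process death",
                  "session_restore_rate", 0.98),
]


def unmet_exit_criteria(register, results):
    """Return the risks whose exit criterion is not yet satisfied.

    A missing measurement counts as unmet, so an untested risk cannot slip through.
    """
    unmet = []
    for item in register:
        value = results.get(item.metric)
        if value is None or value < item.threshold:
            unmet.append(item.risk)
    return unmet


results = {"sign_in_pass_rate": 0.995, "purchase_recovery_rate": 0.91}
print(unmet_exit_criteria(REGISTER, results))
# ['purchase-flow resilience', 'session continuity']
```

Treating a missing metric as a failure is a deliberate choice: the release conversation then centers on which risks still lack evidence, rather than on raw test counts.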

Balance lightweight unit tests, targeted integration checks, and a small set of critical end-to-end flows run on real devices. Push business logic down into the unit layer, keep UI automation lean, and isolate flaky dependencies (for example, network calls) so tests can replace them. An intentional test pyramid lowers maintenance costs and keeps the signal high, especially when your mobile app ships changes every week.
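
The following is a minimal sketch of pushing logic into a unit-testable layer and isolating a flaky dependency behind an interface. Python is used only for illustration. `PriceSource`, `order_total_cents`, `CartViewModel`, and `FakePriceSource` are hypothetical names and do not come from any mobile framework.

```python
from typing import Protocol, Sequence


class PriceSource(Protocol):
    """Boundary around a network-backed dependency (hypothetical interface)."""
    def prices_cents(self, skus: Sequence[str]) -> list[int]: ...


def order_total_cents(prices_cents, discount_percent):
    """Pure pricing logic: fast and deterministic, so it belongs in unit tests."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices_cents)
    return subtotal - subtotal * discount_percent // 100


class CartViewModel:
    """Thin layer that wires the injected dependency to the pure logic."""
    def __init__(self, source: PriceSource):
        self.source = source

    def total_label(self, skus, discount_percent=0):
        try:
            cents = order_total_cents(self.source.prices_cents(skus), discount_percent)
        except ConnectionError:
            return "Price unavailable"
        return f"${cents // 100}.{cents % 100:02d}"


class FakePriceSource:
    """Deterministic stand-in for unit tests: no network, optional scripted failure."""
    def __init__(self, table, fail=False):
        self.table = table
        self.fail = fail

    def prices_cents(self, skus):
        if self.fail:
            raise ConnectionError("simulated network failure")
        return [self.table[sku] for sku in skus]


vm = CartViewModel(FakePriceSource({"a": 1000, "b": 505}))
print(vm.total_label(["a", "b"], discount_percent=10))  # $13.55
print(CartViewModel(FakePriceSource({}, fail=True)).total_label(["a"]))  # Price unavailable
```

Both the happy path and the failure path run in milliseconds without a device or network. Real-device end-to-end runs can then be reserved for the few flows whose risk justifies their cost.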